Transformer Language Model

Date: 23.06.27

Writer: 9tailwolf : doryeon514@gm.gist.ac.kr

Position Embedding

Position embedding is an embedding technique that encodes the position of each word in the sentence.

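In an RNN-based language model, position information comes from the structure itself, because the words are processed one at a time in order. An attention-only model without the recurrent Seq2Seq structure has no such built-in order, so it is hard for it to get position information. For this reason, position embedding is a key component of the Transformer.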

Each value of the position embedding is indexed by the word's position \(p\) in the sentence and by an embedding dimension index. It is calculated as follows.

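\(PE(p,2i) = \sin\left(\frac{p}{10000^{2i/d}}\right)\) and \(PE(p,2i+1) = \cos\left(\frac{p}{10000^{2i/d}}\right)\), where \(d\) is the embedding dimension. Even dimensions use sine, odd dimensions use cosine, and each pair of dimensions \(2i\) and \(2i+1\) shares the same frequency.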
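The formula can be checked with a small sketch. The function name `positional_encoding` and the use of plain Python lists are illustrative choices, not the author's code, and the sketch assumes an even embedding dimension \(d\). Row \(p\) of the result is the vector for position \(p\).

```python
import math


def positional_encoding(max_len, d):
    """Sinusoidal position embedding as a max_len x d list of rows.

    Hypothetical helper for illustration; assumes d is even.
    """
    assert d % 2 == 0, "this sketch assumes an even embedding dimension d"
    pe = [[0.0] * d for _ in range(max_len)]
    for p in range(max_len):
        for i in range(d // 2):
            # Dimensions 2i and 2i+1 share the same frequency 1 / 10000^(2i/d).
            angle = p / (10000 ** (2 * i / d))
            pe[p][2 * i] = math.sin(angle)      # even dimension: sine
            pe[p][2 * i + 1] = math.cos(angle)  # odd dimension: cosine
    return pe


pe = positional_encoding(max_len=4, d=6)
print(pe[0])
print([round(x, 3) for x in pe[1]])
```

Position 0 always gives \([0, 1, 0, 1, \dots]\), since \(\sin 0 = 0\) and \(\cos 0 = 1\). Higher dimensions change more slowly with \(p\) because their frequency is smaller.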

Self Attention

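Attention consists of a Query, a Key, and a Value, and the attention computation can be written as a function \(A(Q,K,V)\). For example, scaled dot-product attention is \(A(Q,K,V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V\), where \(d_k\) is the dimension of the key vectors. Self-attention is attention in which the query, key, and value all come from the same input sentence: each is obtained by multiplying the input embeddings \(X\) by its own learned weight matrix, \(Q = XW^{Q}\), \(K = XW^{K}\), \(V = XW^{V}\).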
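A minimal sketch of scaled dot-product attention on plain Python lists follows. The helper names (`matmul`, `transpose`, `softmax`, `attention`) and the toy matrices are hypothetical; each row of `Q`, `K`, and `V` stands for one word.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    m = max(row)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q: n_q x d_k, K: n_k x d_k, V: n_k x d_v.
    Returns (output n_q x d_v, weights n_q x n_k).
    """
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out, w = attention(Q, K, V)
print(w)    # the query matches the first key better, so w[0][0] > w[0][1]
print(out)
```

The softmax turns each row of scores into weights that sum to 1, so every output row is a weighted average of the value rows.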

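Self-attention has a useful property: it yields a degree of similarity between every pair of words in the input sentence. The attention weights (the softmax-normalized query-key scores) form a matrix with one row and one column per word, and each row shows how strongly that word attends to every word in the sentence.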
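The similarity matrix can be seen in a small self-attention sketch, where \(Q\), \(K\), and \(V\) are all projections of the same input \(X\). The toy embeddings are hypothetical, and identity matrices stand in for the learned \(W^{Q}\), \(W^{K}\), \(W^{V}\) only to keep the numbers readable.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X, W_q, W_k, W_v):
    """Self-attention: query, key, and value are all projections of the same input X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    # weights[j][t]: how strongly word j attends to word t (each row sums to 1).
    weights = [
        softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K])
        for q_row in Q
    ]
    return matmul(weights, V), weights


# Three toy "word" embeddings of size 2; words 0 and 2 are identical.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
W_identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
out, weights = self_attention(X, W_identity, W_identity, W_identity)
for row in weights:
    print([round(v, 3) for v in row])
```

Word 0 gives equal, larger weights to itself and to the identical word 2 than to word 1, which is the word-to-word similarity described above.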

Multi-head Attention

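Multi-head attention runs several attention operations, called heads, in parallel. Each head applies attention with its own learned query, key, and value projections and produces an output \(z_{j}\). The head outputs are concatenated into \(z = (z_{1},z_{2},z_{3},\dots,z_{N})\), and the result \(Z\) is obtained by multiplying this concatenation by the matrix \(W_{0}\), that is, \(Z = zW_{0}\).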

\(W_{0}\) is also a learned parameter that is updated during training.
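The steps above can be sketched in plain Python. This is a minimal illustration, not a trained model: the names `multi_head_self_attention` and `rand_matrix` are hypothetical, and random matrices stand in for the learned per-head projections and for \(W_{0}\).

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    weights = [
        softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K])
        for q_row in Q
    ]
    return matmul(weights, V)


def multi_head_self_attention(X, heads, W_0):
    """heads: list of (W_q, W_k, W_v) projection matrices, one tuple per head."""
    # Each head attends independently with its own projections, giving z_1, ..., z_N.
    z = [attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)) for W_q, W_k, W_v in heads]
    # Concatenate the head outputs word by word: row r becomes z_1[r] + z_2[r] + ...
    concat = [[x for z_j in z for x in z_j[r]] for r in range(len(X))]
    return matmul(concat, W_0)  # final projection with W_0


def rand_matrix(rows, cols):
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d_model, n_heads = 4, 2
d_k = d_model // n_heads
X = rand_matrix(3, d_model)  # 3 words, each a d_model-dimensional embedding
heads = [(rand_matrix(d_model, d_k), rand_matrix(d_model, d_k), rand_matrix(d_model, d_k))
         for _ in range(n_heads)]
W_0 = rand_matrix(n_heads * d_k, d_model)
Z = multi_head_self_attention(X, heads, W_0)
print(len(Z), len(Z[0]))  # 3 4
```

Splitting \(d_{model}\) evenly across heads (here \(d_k = d_{model}/N\)) is a common choice that keeps the concatenation the same width as the input, so \(W_{0}\) can be square.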

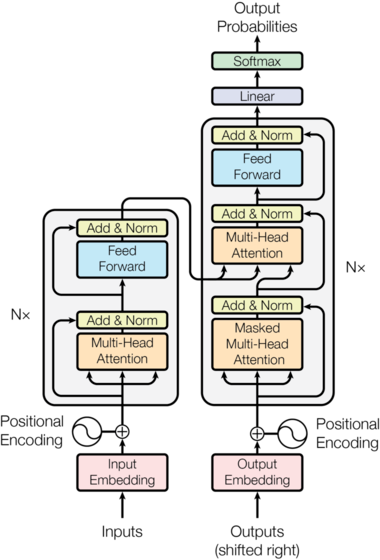

Transformer Language Model

The process above summarizes the Transformer language model, which consists of the following steps.

- Encoder

    1. Embedding of the input words.

    2. Position embedding of the input words.

    3. Multi-head attention over the embedded input words (self-attention).

    4. Feed-forward network. Its output is used in step 4 of the decoder.

- Decoder

    1. Embedding of the output words.

    2. Position embedding of the output words.

    3. Multi-head attention over the embedded output words (masked self-attention, so each position attends only to itself and earlier positions).

    4. Multi-head attention whose Query comes from the result of decoder step 3 and whose Key and Value come from the result of encoder step 4 (encoder-decoder attention).

    5. Feed-forward network.

    6. A softmax function to find the most probable output word.
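The two attention steps of the decoder can be sketched as follows. In the standard Transformer, the decoder's self-attention is masked so a word cannot look at later words, and in encoder-decoder attention the Query comes from the decoder while the Key and Value come from the encoder output. This sketch is simplified under stated assumptions: a single head, no learned projections, and hypothetical toy vectors for the encoder output and the output words.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # finite, because position 0 is never masked
    exps = [math.exp(x - m) for x in row]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V, causal=False):
    """softmax(Q K^T / sqrt(d_k)) V, optionally with a causal (look-ahead) mask."""
    d_k = len(K[0])
    weights = []
    for j, q_row in enumerate(Q):
        scores = [sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
        if causal:
            # Position j may only attend to positions 0..j; later ones get -inf.
            scores = [s if t <= j else float("-inf") for t, s in enumerate(scores)]
        weights.append(softmax(scores))
    return matmul(weights, V), weights


encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy encoder output: 3 input words
decoder_in = [[0.5, 0.5], [1.0, 0.0]]               # toy embedded output words: 2 so far

# Masked self-attention over the output words.
dec_self, self_w = attention(decoder_in, decoder_in, decoder_in, causal=True)

# Encoder-decoder attention: Query from the decoder, Key and Value from the encoder.
cross, cross_w = attention(dec_self, encoder_out, encoder_out)
print(self_w[0])                      # [1.0, 0.0]: the first word only sees itself
print(len(cross_w), len(cross_w[0]))  # 2 3: one row per output word, one column per input word
```

The shape of `cross_w` shows why the Query must come from the decoder: each output word gets one row of weights over all the input words.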