Sentiment Classification with TextCNN

Date: 23.05.23

Writer: 9tailwolf : doryeon514@gm.gist.ac.kr

Introduction

CNN is a useful deep learning structure for Computer Vision and Speech Recognition. However, CNNs have turned out to be useful not only for CV but also for NLP. In particular, CNNs are effective for sentence classification problems.

CNN for Text Data

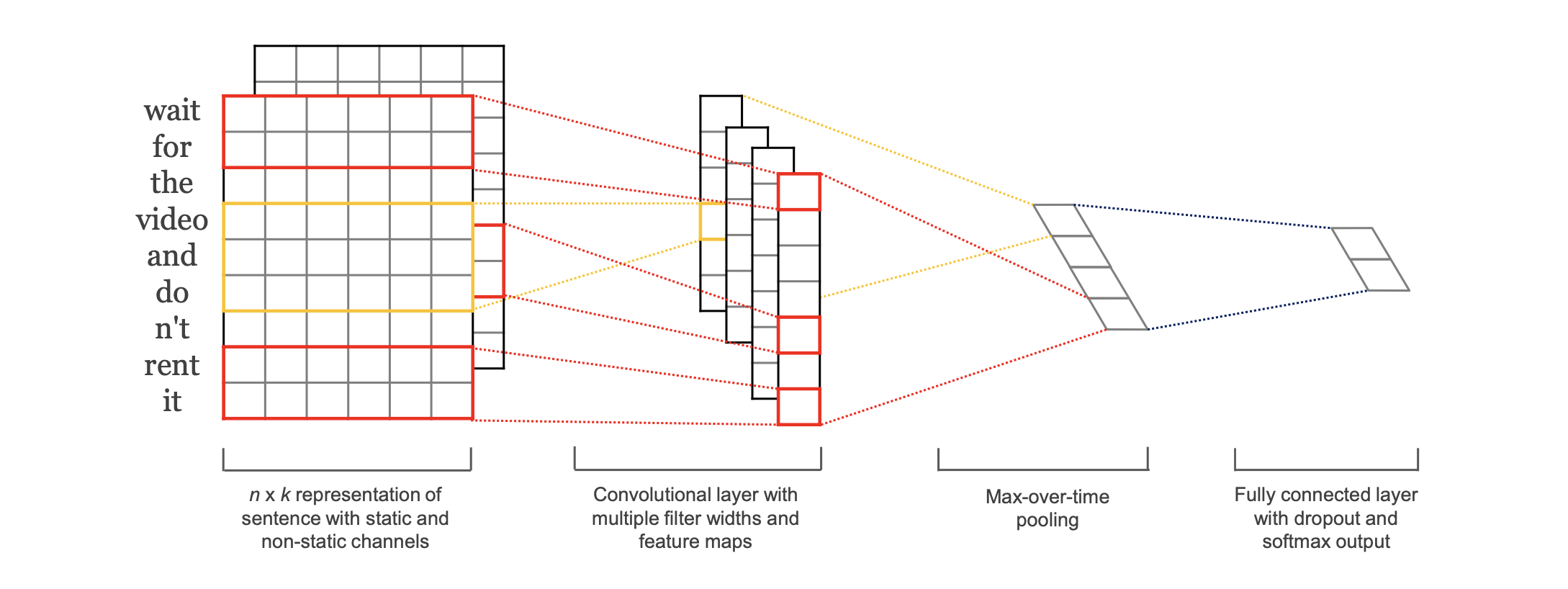

The figure above shows the whole TextCNN process.

1. Embedding

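Let \(x_{i} \in \mathbb{R}^{k}\) be the \(k\)-dimensional word vector of the \(i\)-th word in a sentence. A sentence of length \(n\) is then represented as the sequence \(\vec{x}_{1:n} = x_{1},x_{2},x_{3},...,x_{n-1},x_{n}\), and stacking these vectors row by row gives \(\vec{x}_{1:n} \in \mathbb{R}^{n,k}\).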
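The embedding step can be sketched in plain Python as a table lookup that stacks one \(k\)-dimensional vector per word into an \(n \times k\) matrix. This is a minimal sketch under stated assumptions: the five-word vocabulary, the random \(k = 4\) vectors, the `<unk>` fallback token and the names `embedding_table` and `embed_sentence` are all hypothetical. Real TextCNN setups typically use pre-trained or learned embeddings instead of random ones.

```python
import random

K = 4  # embedding dimension k (toy value, an assumption)
rng = random.Random(0)

# Hypothetical vocabulary; "<unk>" stands in for out-of-vocabulary words.
vocab = ["i", "love", "this", "movie", "<unk>"]
# Hypothetical embedding table: one random k-dimensional vector per word.
embedding_table = {word: [rng.uniform(-1.0, 1.0) for _ in range(K)] for word in vocab}


def embed_sentence(tokens, table, unk="<unk>"):
    """Stack the word vectors x_1..x_n into an n x k matrix (a list of rows)."""
    return [table.get(tok, table[unk]) for tok in tokens]


sentence = ["i", "love", "this", "movie"]
x = embed_sentence(sentence, embedding_table)
print(len(x), len(x[0]))  # n rows, k columns
```

Each row of `x` is one \(x_{i}\), so `x` plays the role of \(\vec{x}_{1:n}\).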

2. Filter

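To apply a CNN, we use a filter \(W \in \mathbb{R}^{h,k}\) that spans a window of \(h\) consecutive words. Applying the filter to the window \(\vec{x}_{i:i+h-1}\) produces a feature \(c_{i}\) by the formula below, where \(\cdot\) is the sum of element-wise products, \(b \in \mathbb{R}\) is a bias term and \(f\) is a non-linear function:

\[ c_{i} = f(W \cdot \vec{x}_{i:i+h-1} + b) \]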
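The filter step can be sketched as sliding an \(h \times k\) filter over the sentence matrix and computing \(c_{i} = f(W \cdot \vec{x}_{i:i+h-1} + b)\) for every window. This minimal sketch assumes ReLU as the non-linearity \(f\); the helper names and the toy numbers are hypothetical.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(p * q for p, q in zip(a, b))


def relu(v):
    """One common choice for the non-linearity f (an assumption here)."""
    return max(0.0, v)


def apply_filter(x, W, b, f=relu):
    """Slide an h x k filter W over the n x k sentence matrix x.

    Returns the feature map c = [c_1, ..., c_{n-h+1}] with
    c_i = f(W . x_{i:i+h-1} + b), where '.' sums element-wise products.
    """
    n, h = len(x), len(W)
    c = []
    for i in range(n - h + 1):
        window = x[i:i + h]  # rows x_i .. x_{i+h-1}
        s = sum(dot(w_row, x_row) for w_row, x_row in zip(W, window))
        c.append(f(s + b))
    return c


# Toy sentence matrix: n = 4 words, k = 2 dimensions (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]
# One filter with window size h = 2 (also made-up numbers).
W = [[1.0, 1.0], [1.0, -1.0]]
c = apply_filter(x, W, b=0.0)
print(c)  # [0.0, 1.0, 4.0]
```

With \(n = 4\) and \(h = 2\) there are \(n - h + 1 = 3\) windows, so the feature map has three entries.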

3. Pooling

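Applying the filter to every possible window \(\vec{x}_{i:i+h-1}\) of the sentence produces a feature map \(\vec{c}=[c_{1},c_{2},...,c_{n-h+1}]\), i.e. \(\vec{c} \in \mathbb{R}^{n-h+1}\). We then apply the max-over-time pooling operation to each feature map, which keeps only its maximum value: for the \(j\)-th filter, \(\hat{c}_{j} = \max \{ \vec{c}_{j} \}\). With \(m\) filters, the pooled values are concatenated into \(z\):

\[ z = [\hat{c}_{1}, \hat{c}_{2}, ..., \hat{c}_{m}] \]

Because each feature map collapses to a single value, \(z\) has a fixed length \(m\) regardless of the sentence length \(n\).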
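Max-over-time pooling and the construction of \(z\) can be sketched as taking the maximum of each filter's feature map and collecting the results. The feature maps below are made-up values for three hypothetical filters with window sizes \(h = 2, 3, 4\) on a five-word sentence, so their lengths are \(n - h + 1 = 4, 3, 2\); the function names are also hypothetical.

```python
def max_over_time(feature_map):
    """c_hat = max{c}: keep only the largest activation of one feature map."""
    return max(feature_map)


def pool_all(feature_maps):
    """Build z = [c_hat_1, ..., c_hat_m] from the feature maps of m filters."""
    return [max_over_time(c) for c in feature_maps]


# Made-up feature maps from m = 3 filters (h = 2, 3, 4) on an n = 5 sentence.
feature_maps = [
    [0.1, 0.7, 0.3, 0.0],
    [0.5, 0.2, 0.9],
    [0.4, 0.6],
]
z = pool_all(feature_maps)
print(z)  # [0.7, 0.9, 0.6]
```

The feature maps have different lengths, but `z` always has one entry per filter.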

4. Dropout

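Applying dropout helps the model avoid overfitting. Dropout effectively trains many different thinned sub-networks at once: during training, each element of \(z\) is randomly kept or zeroed out, so only the kept units take part in the forward pass and receive gradients during backpropagation. The output is computed as below, where \(w\) and \(b\) are the weights and bias of the output layer, \(\circ\) is element-wise multiplication and \(r\) is a masking vector of Bernoulli random variables, each equal to 1 with probability \(p\):

\[ y = w \cdot (z \circ r) + b \]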
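The dropout output layer can be sketched as drawing a Bernoulli mask \(r\), multiplying it element-wise with \(z\), and then applying the output weights. This is a minimal sketch assuming \(p\) is the probability that a unit is kept; the helper names and toy numbers are hypothetical. The test-time branch, which skips the mask and scales \(w\) by \(p\), follows the standard (non-inverted) dropout convention and is an assumption, not something described in this post.

```python
import random


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(p * q for p, q in zip(a, b))


def bernoulli_mask(m, p, rng):
    """r in {0, 1}^m: each entry is 1 with probability p (kept), else 0."""
    return [1.0 if rng.random() < p else 0.0 for _ in range(m)]


def output_with_dropout(z, w, b, p=0.5, training=True, rng=None):
    """Return (y, r).

    Training: y = w . (z o r) + b, where 'o' is element-wise multiplication.
    Test (assumed non-inverted convention): no mask is drawn, r is None, and
    w is scaled by p so the expected pre-activation matches training.
    """
    if training:
        if rng is None:
            rng = random.Random()
        r = bernoulli_mask(len(z), p, rng)
        masked = [zj * rj for zj, rj in zip(z, r)]
        return dot(w, masked) + b, r
    return dot([p * wj for wj in w], z) + b, None


z = [0.7, 0.9, 0.6]   # pooled features (made-up values)
w = [1.0, -2.0, 0.5]  # hypothetical output-layer weights
y_train, r = output_with_dropout(z, w, 0.1, p=0.5, training=True, rng=random.Random(42))
y_test, _ = output_with_dropout(z, w, 0.1, p=0.5, training=False)
print(r, y_train, y_test)
```

Passing a seeded `random.Random` makes the mask reproducible, which is convenient when checking the computation by hand.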